Dealer, sales or any other kind of commissions can be calculated quite easily with the table functions of the .net library finaquant® calcs, as the following example will demonstrate.

In this article we explain:

- Basic transaction-based (sales data) commission scenario

- How commissions can be calculated with the table functions

- How input data can be fetched from external databases

- How periodic commission calculations can be automated

Commission Scenario

- Dealers receive sales commissions proportional to their sales totals for each product group in a quarter.

- Product groups (or pools) can be defined by category or individual products for each dealer.

- The commission scale with tiered rates (class or level scale logic) for each product group (or pool) determines the amount of commissions to be paid.

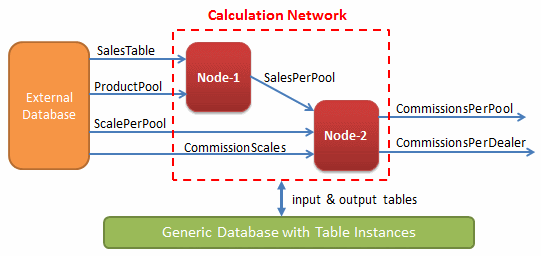

Data Flow & Calculation

All input tables are fetched from an external database using the event functions of Calculation Nodes that are triggered before any input table is read from the Generic Database.

For this demonstration we use a MS SQL database (SQL Express) named externaldb as the external, and another MS SQL database named finaquantdb as the generic database.

After proper configuration, an event function:

- Reads input tables from the external database externaldb

- Filters input tables according to the Calculation Instance parameters. For example, if you want to calculate commissions for the 2. quarter of 2012, a sub-table containing the sales data for only this quarter will be passed to Calculation Node.

- Compares the instance tables in Generic Database finaquantdb (if there already exist) with the filtered inputs from the External Database. It overwrites the instance input tables only if these two set of tables are not identical.

Calculation Nodes are configured such that all input and output table instances are stored in the Generic Database. This configuration is especially useful if you prefer to be able to replicate past calculations.

Generating input tables

Run the demo function WriteTablesToExternalDatabase which generates test tables as mock inputs and stores them in external database.

WriteTablesToExternalDatabase()

// Write test tables into database externaldb for Commission Calculation // Create your own database, and update the connection string before you run this function public static void WriteTablesToExternalDatabase() { DataStore dstore = null; try { // Call helper function to create meta data & test tables MetaData md; MatrixTable SalesTable, ComScaleTable, ScalePoolTable, PoolTable; Create_Sales_And_Commission_Tables(out md, out SalesTable, out ComScaleTable, out ScalePoolTable, out PoolTable); // write test tables to database externaldb // Create your database & update the connection strings below with your values on your computer // you can use a MS SQL or MySQL database // MS SQL SqlClient string connStr1 = @"Data Source=(local)\SQLEXPRESS;Initial Catalog=externaldb;Integrated Security=True;Connect Timeout=15;Encrypt=False;TrustServerCertificate=False"; dstore = new DataStore("System.Data.Odbc"); // MySQL ODBC // string connStr2 = @"Driver={MySQL ODBC 5.2w Driver};Server=127.0.0.1;Database=externaldb;uid=root;pwd=Tosun27"; // dstore = new DataStore("System.Data.SqlClient"); // open database connection dstore.OpenConnection(connStr1); // row partition sales table // NumVector RowInd = NumVector.CreateSequenceVector(0, 1, 500); // SalesTable = SalesTable.PartitionRow(RowInd); // first delete existing tables in external database try { dstore.DropDatabaseTable("sales"); } catch { }; try { dstore.DropDatabaseTable("commission_scales"); } catch { }; try { dstore.DropDatabaseTable("scale_pool"); } catch { }; try { dstore.DropDatabaseTable("pool"); } catch { }; // Sales Table DataTable dt = SalesTable.ToDataTable(); dt.TableName = "sales"; dstore.WriteTable(dt, md); // ComScaleTable dt = ComScaleTable.ToDataTable(); dt.TableName = "commission_scales"; dstore.WriteTable(dt, md); // ScalePoolTable dt = ScalePoolTable.ToDataTable(); dt.TableName = "scale_pool"; dstore.WriteTable(dt, md); // PoolTable dt = PoolTable.ToDataTable(); dt.TableName = "pool"; dstore.WriteTable(dt, md); } catch (Exception ex) { System.Diagnostics.Debug.WriteLine("ERROR in WriteTablesToExternalDatabase: " + ex.Message); } finally { // close database connection dstore.CloseConnection(); } } |

You need to create two databases, one for external (externaldb) and one for generic (finaquantdb), and update the connection strings before you run this function.

Let’s have a look at the input tables.

1. input table: Sales

Transaction data contains sales data for each dealer from 20.10.2011 to 13.03.2013

2. input table: Product Pools

In this table product categories and/or individual products are mapped to a Product Pool for each dealer.

3. input table: Commission Scale Table with tiered rates

For scale_id = 2, apply:

5% for 0 <= sales amount < 500

10% for 500 <= sales amount < 2000

20% for 2000 <= sales amount

4. input table: Pool To Scale

This input table determines which Commission Scale is assigned to each pool, and which scale logic (class or level) is to be applied for each pool.

Now that we have our input tables secured in a database we can go on with table functions to formulate the commission calculation.

Commission Calculation with Table Functions

Commission calculation can be formulated and tested first with table functions only without bothering for Calculation Nodes or any database connection. All these operations are done with in-memory tables.

CalculateCommissions()

// Calculate Commissions with Table Functions without database connection public static void CalculateCommissions() { // calculation parameters string period = "quarter"; // or monthly // create meta data & test tables MetaData md; MatrixTable SalesTable, ComScaleTable, ScalePoolTable, PoolTable; Create_Sales_And_Commission_Tables(out md, out SalesTable, out ComScaleTable, out ScalePoolTable, out PoolTable); // define date-related attributes md.AddNewField("year", FieldType.IntegerAttribute); md.AddNewField("quarter", FieldType.IntegerAttribute); md.AddNewField("month", FieldType.IntegerAttribute); // define required key figures md.AddNewField("pooled_sales", FieldType.KeyFigure); md.AddNewField("interval_rate", FieldType.KeyFigure); md.AddNewField("effective_rate", FieldType.KeyFigure); md.AddNewField("commission", FieldType.KeyFigure); // add date-related attributes to SalesTable // add year to table MatrixTable SalesTableWithYear = MatrixTable.InsertDateRelatedAttribute(SalesTable, "date", "year", DateRelation.Year); // add quarter to table MatrixTable SalesTableWithQuarter = MatrixTable.InsertDateRelatedAttribute(SalesTableWithYear, "date", "quarter", DateRelation.Quarter); TextVector RefAttrib = TextVector.CreateVectorWithElements("dealer", "quarter", "year", "pool_id"); // view table MatrixTable.View_MatrixTable(SalesTableWithQuarter, "SalesTableWithQuarter"); // add attribute pool_id to table MatrixTable SalesTableWithPool = MatrixTable.CombineTables(SalesTableWithQuarter, PoolTable, JokerMatchesAllvalues: true); // view table MatrixTable.View_MatrixTable(SalesTableWithPool, "SalesTableWithPool"); // aggregate sales table w.r.t. pool TextVector SubTableFields = TextVector.CreateVectorWithElements("dealer", "year", period, "pool_id", "sales"); SalesTableWithPool = SalesTableWithPool.PartitionColumn(SubTableFields); MatrixTable AggregatedSalesTableWithPool = SalesTableWithPool.AggregateAllKeyFigures(null); // view table MatrixTable.View_MatrixTable(AggregatedSalesTableWithPool, "AggregatedSalesTableWithPool"); // add attributes scale_id & scale_logic to AggregatedSalesTableWithPool MatrixTable AggregatedSalesWithScale = MatrixTable.CombineTables(AggregatedSalesTableWithPool, ScalePoolTable, JokerMatchesAllvalues: true); // view table MatrixTable.View_MatrixTable(AggregatedSalesWithScale, "AggregatedSalesWithScale"); // get scale table for each scale_id SubTableFields = TextVector.CreateVectorWithElements("lower_limit", "commission_rate"); MatrixTable UniqueScaleIds; MatrixTable[] ScaleTables; MatrixTable.GetSubTables(ComScaleTable, SubTableFields, out UniqueScaleIds, out ScaleTables); // Table array indexed with a dictionary (assoc array indexed with scale_id) var ScaleTableDic = new Dictionary<int, MatrixTable>(); for (int i = 0; i < UniqueScaleIds.RowCount; i++) { ScaleTableDic[(int)UniqueScaleIds.GetFieldValue("scale_id", i)] = ScaleTables[i]; } // insert new key figures to table AggregatedSalesWithScale = MatrixTable.InsertNewColumn(AggregatedSalesWithScale, "interval_rate", 0.0); AggregatedSalesWithScale = MatrixTable.InsertNewColumn(AggregatedSalesWithScale, "effective_rate", 0.0); AggregatedSalesWithScale = MatrixTable.InsertNewColumn(AggregatedSalesWithScale, "commission", 0.0); // row-by-row processing of table for calculating commission rates var TextAttribDic = new Dictionary<string, string>(); var NumAttribDic = new Dictionary<string, int>(); var KeyFigDic = new Dictionary<string, double>(); MatrixTable CommissionsPerPool = MatrixTable.TransformRowsDic(AggregatedSalesWithScale, CalCommissionForEachRow, ScaleTableDic); // view table MatrixTable.View_MatrixTable(CommissionsPerPool, "CommissionsPerPool"); // calculate total commission per dealer for each period (quarter or month) SubTableFields = TextVector.CreateVectorWithElements("dealer", "year", period, "sales", "commission"); MatrixTable CommissionsPerDealer = CommissionsPerPool.PartitionColumn(SubTableFields); CommissionsPerDealer = CommissionsPerDealer.AggregateAllKeyFigures(null); // round sales & commission amounts to two digits after decimal point CommissionsPerDealer = MatrixTable.Round(CommissionsPerDealer, 2); // view table MatrixTable.View_MatrixTable(CommissionsPerDealer, "CommissionsPerDealer"); // round commission amounts MatrixTable.Round(CommissionsPerDealer, 2); } |

The C# method CalculateCommissions with table functions above delivers following output tables:

|

|

The calculation works as expected. In the next step the whole formula can be broken down to manageable smaller formulas, and each formula (i.e. high-level table function) is assigned to a particular Calculation Node in the Network.

Configuring Calculation Network & Nodes for automated data retrieval, calculation & storage

First, define all required table fields (meta data) and initiate Calculation Network:

Define Meta Data// define meta data var md = MetaData.CreateEmptyMetaData(); // attributes MetaData.AddNewField(md, "category", FieldType.TextAttribute); MetaData.AddNewField(md, "product", FieldType.TextAttribute); MetaData.AddNewField(md, "dealer", FieldType.TextAttribute); MetaData.AddNewField(md, "date", FieldType.DateAttribute); MetaData.AddNewField(md, "scale_id", FieldType.IntegerAttribute); // commission scale id MetaData.AddNewField(md, "pool_id", FieldType.IntegerAttribute); MetaData.AddNewField(md, "scale_logic", FieldType.TextAttribute); // level or class // date-related attributes MetaData.AddNewField(md, "year", FieldType.IntegerAttribute); MetaData.AddNewField(md, "quarter", FieldType.IntegerAttribute); MetaData.AddNewField(md, "month", FieldType.IntegerAttribute); // key figures MetaData.AddNewField(md, "lower_limit", FieldType.KeyFigure); MetaData.AddNewField(md, "commission_rate", FieldType.KeyFigure); MetaData.AddNewField(md, "sales", FieldType.KeyFigure); MetaData.AddNewField(md, "interval_rate", FieldType.KeyFigure); MetaData.AddNewField(md, "effective_rate", FieldType.KeyFigure); MetaData.AddNewField(md, "commission", FieldType.KeyFigure); |

Initiate Calculation Network

// Initiate Calculation Network Network nw = new Network("ComNW"); // database connection string; replace it with your own connection string string connStr; connStr = @"Data Source=(local)\SQLEXPRESS;Initial Catalog=finaquantdb;Integrated Security=True;Connect Timeout=15;Encrypt=False;TrustServerCertificate=False"; // connStr = @"Driver={MySQL ODBC 5.2w Driver};Server=127.0.0.1;Database=finaquant;uid=root;pwd=Tosun27"; // data provider string DataProvider: DataProvider = "System.Data.SqlClient"; // DataProvider = "System.Data.Odbc"; // MySQL // initiate DataStore DataStore dstore = new DataStore(DataProvider); // Assign a DataStore to Network for connection to Generic Database nw.DataStore_Network = dstore; nw.ConnectionString = connStr[dbselect]; // Database table for storing log table instances nw.Set_OutputSink_LogTable("comnw_log"); |

Next step is configuring the behavior of Calculation Nodes: How they read and validate input data, which events are triggered at what time points, how the input and output table instances are stored, and so on.

Configure 1. Calculation Node with 2 input and 1 output tables

Configuration summary:

- Event functions

ReadSalesandReadProductPoolare fired before reading the input tables from the Generic Database. - These event functions read data from the external database and write them to Generic Database, unless external tables are exactly identical to the table instances already stored in Generic Database.

- Calculation Node reads its input tables from the Generic Database, applies the assigned validation rules and function, executes the table function of the node

CalcAggregateSalesand generates resultant output table SalesPerPool. This output table is stored in the Generic Database.

// Calculation Node with 2 input (sales, product_pool) and 1 output (aggregated_sales) tables Node node1 = new Node(NodeFunc: NodeFunc_CalcAggregatedSalesPerPool, InputCount: 2, OutputCount: 1, NodeName: "CalcAggregatedSales"); // add input sources node1.Add_InputSource(TableName: "sales", NodeName: "CalcAggregatedSales"); // 1. table node1.Add_InputSource(TableName: "product_pool", NodeName: "CalcAggregatedSales"); // 2. table // register custom functions to event in order fetch data from external data stores // ... and store input table instances in generic database finaquantdb node1.BeforeReadingInputs += ReadSalesTable_EventFunc; node1.BeforeReadingInputs += ReadProductPoolTable_EventFunc; // input validation node1.Set_AllInputs_IfNullPermitted(false); // null-valued input tables are not permitted // validation for 1. input: Sales Table node1.Set_Input_ValidationParameters(InputIndex: 0, IfNullPermitted: false, MinColumnCount: 3, MinRowCount: 1, ContainsFields: TextVector.CreateVectorWithElements("dealer", "date", "sales")); // validation for 2. input: Pool Table node1.Set_Input_ValidationParameters(InputIndex: 1, IfNullPermitted: false, MinColumnCount: 2, MinRowCount: 1, ContainsFields: TextVector.CreateVectorWithElements("pool_id", "dealer")); // custom validation function for all inputs // checks if a pool_id is defined for each dealer in sales table node1.AllInputs_ValidationFunc = CheckSalesAndPoolTables; // store output table instance in generic database node1.Add_OutputSink("aggregated_sales"); // store log table instance node1.Set_OutputSink_LogTable("node1_log"); // Add Node to Network nw.AddNode(node1, UseNetworkDataStore: true, AssignStdNodeName: false); |

Configure 2. Calculation Node with 3 input and 2 output tables

Configuration summary:

- Event functions

ReadScalePoolTableandReadComScaleTableare triggered before reading the input tables from the Generic Database. - These event functions read data from the external database and write them to Generic Database, unless the external tables are exactly identical to the table instances already stored in Generic Database.

- Second Node reads its first input table directly from the first Node (in-memory); not from the Generic Database.

- 2. and 3. input tables, PoolToScale and CommissionScales, are read from the Generic Database.

- Both output tables are stored in the Generic Database.

// Calculation Node with 3 input (aggregated_sales, scale_pool, commission_scales) // and 2 output (commissions_per_pool, commissions_per_dealer) tables Node node2 = new Node(NodeFunc: NodeFunc_CalcCommissionsPerAgent, InputCount: 3, OutputCount: 2, NodeName: "CalcCommissions"); // add input sources node2.Add_InputSource(FeederNode: nw[0], OutputIndex: 0); // direct output from 1. node node2.Add_InputSource(TableName: "scale_pool", NodeName: "CalcCommissions"); // instance table node2.Add_InputSource(TableName: "commission_scales", NodeName: "CalcCommissions"); // instance table // register custom functions to event in order fetch data from external data stores // ... and store input table instances in generic database finaquantdb node2.BeforeReadingInputs += ReadScalePoolTable_EventFunc; node2.BeforeReadingInputs += ReadComScaleTable_EventFunc; // input validation node2.Set_AllInputs_IfNullPermitted(false); // null-valued input tables are not permitted // validation for 1. input: AggregatedSalesTable node2.Set_Input_ValidationParameters(InputIndex: 0, IfNullPermitted: false, MinColumnCount: 5, MinRowCount: 1, ContainsFields: TextVector.CreateVectorWithElements("dealer", "pool_id", "year", "quarter", "sales")); // validation for 2. input: Pool Table node2.Set_Input_ValidationParameters(InputIndex: 1, IfNullPermitted: false, MinColumnCount: 3, MinRowCount: 1, ContainsFields: TextVector.CreateVectorWithElements("scale_logic", "pool_id", "scale_id")); // validation for 3. input: Com. Scale Table node2.Set_Input_ValidationParameters(InputIndex: 2, IfNullPermitted: false, MinColumnCount: 3, MinRowCount: 1, ContainsFields: TextVector.CreateVectorWithElements("commission_rate", "lower_limit", "scale_id")); // no custom validation function for all inputs // store output table instance in generic database node2.Add_OutputSink("commissions_per_pool"); node2.Add_OutputSink("commissions_per_dealer"); // store log table instance node2.Set_OutputSink_LogTable("node2_log"); // get execution timestamps from log table node2.IfGetTimeStampsFromLogTable = false; // Add Node to Network nw.AddNode(node2, UseNetworkDataStore: true, AssignStdNodeName: false); |

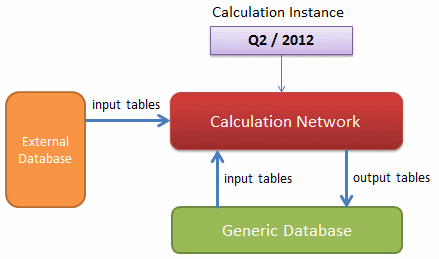

Now that both Nodes are configured our Calculation Network is ready for execution. But first, a Calculatio Instance needs to be created:

Calculation Instance: 2. quarter of 2012

// Create Calculation Instance var RowFields = TextVector.CreateVectorWithElements("quarter", "year"); var InstanceTblFields = TableFields.CreateTableFields(RowFields, md); TableRow CalcInstance = TableRow.CreateTableRowWithElements(InstanceTblFields, 2, 2012); |

Execute Calculation Network

Calculate Sales Commissions for the given Calculation Instance. As an example, for the 2nd quarter of 2012.

// execute calculation network MatrixTable LogTable; nw.ExecuteNetwork(CalcInstance, out LogTable); |

If you want to calculate the commissions for another period, you just need to update the Calculation Instance, and re-execute the Network.

Once executed, the Calculation Network will produce following output tables:

Log Table of Calculation Network

// view LogTable MatrixTable.View_MatrixTable(LogTable, "LogTable of Calculation Network"); |

Aggregated Sales per Product Pool

// view Aggregated Sales (output of first node) MatrixTable.View_MatrixTable(nw[0].ReadOutput_from_Database(OutputIndex: 0, CalcInstance: CalcInstance), "Output(0,0) AggregatedSalesTable"); |

Sales Commissions per Product Pool

And finally:

Sales Commissions per Dealer

Conclusions

In this article we demonstrated a simple but extendable Commission Calculation.

The same approach can be applied for other kind of table computations in areas like Business Intelligence (Planning & Reporting), Marketing Analytics, Performance Analytics, Risk Analytics, and so on. The suggested steps are the following ones:

- Describe the calculation scenario with its input and output tables. Prepare input tables and expected outcomes to test the results.

- Formulate calculations first with table functions only, without Calculation Nodes & Network, or any database connection. If necessary, database connection can only be used to feed in the input data.

- If the table functions work as expected, break the big formula down into smaller formulas and distribute them to Calculation Nodes considering factors like transparency & easy overview, testability, reusability, scalability and performance.

- Configure the Calculation Nodes, and add them to Network.

- Execute the Calculation Network for multiple calculation instances, and check the results.

Periodic calculations can be automated by triggering the node execution once or multiple times in each period using a .net timer, or a workflow manager for scheduled tasks.

Follow us at our Facebook and/or Google+ pages for more up-to-date product information.

Copyright secured by Digiprove © 2013 Tunc Ali Kütükcüoglu

Copyright secured by Digiprove © 2013 Tunc Ali Kütükcüoglu